Robots Exclusions

Flare Online supports a robots.txt file that lets you disallow web crawling for an individual site. The file is generated automatically based on the site setting "Include in search engines." When you disable this option, the server adds the site's URL to the robots.txt file it generates, and that file instructs crawlers not to crawl content at that URL.
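To see what such a file means to a crawler, here is a minimal sketch using Python's standard `urllib.robotparser` module. The file content is an assumption for illustration: a site-wide `Disallow: /` rule for all crawlers. The exact text Flare Online generates may differ, and the `is_crawlable` helper and the `docs.example.com` host are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical content: assumes the generated file blocks the whole site
# for every crawler. Flare Online's actual generated text may differ.
GENERATED_ROBOTS = """\
User-agent: *
Disallow: /
"""


def is_crawlable(robots_text: str, url: str, agent: str = "*") -> bool:
    """Return True if `agent` may crawl `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())  # parse text directly, no network access
    return parser.can_fetch(agent, url)
```

With this content, `is_crawlable(GENERATED_ROBOTS, "https://docs.example.com/guide/intro.htm")` returns `False`, as it does for every other path on the site.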

You can customize the robots.txt file with your own robots exclusions. You can either add your rules to the existing robots.txt file or replace that file with a new one.

Warning Editing a robots exclusion file is a task for advanced users. Incorrect rules can unintentionally exclude content from search engines. You might need to involve someone in your company, such as a system administrator, to verify that your rules are correct.
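One way to verify rules before saving them is to test them locally against a few representative URLs. The following is a minimal sketch using Python's `urllib.robotparser`; the paths, the host, and the `blocked_urls` helper are hypothetical. It shows a common mistake: robots.txt rules are prefix matches, so a rule without a trailing slash can block more than intended.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_text: str, urls: list[str], agent: str = "*") -> list[str]:
    """Return the subset of `urls` that `agent` is NOT allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]


# Intended rule: hide only the /drafts/ folder (hypothetical path).
INTENDED = "User-agent: *\nDisallow: /drafts/\n"
# Mistaken rule: without the trailing slash, the prefix "/drafts"
# also matches /drafts-archive/ and anything else starting with "/drafts".
MISTAKE = "User-agent: *\nDisallow: /drafts\n"

PAGES = [
    "https://docs.example.com/drafts/new-topic.htm",
    "https://docs.example.com/drafts-archive/old-topic.htm",
    "https://docs.example.com/guide/intro.htm",
]
```

Comparing `blocked_urls(INTENDED, PAGES)` with `blocked_urls(MISTAKE, PAGES)` makes the extra blocked page visible before the rule ever reaches a live site.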


Permission Required?

For this activity, you must have the following permission setting:

For more information about permissions, see Setting User Permissions or Setting Team Permissions.

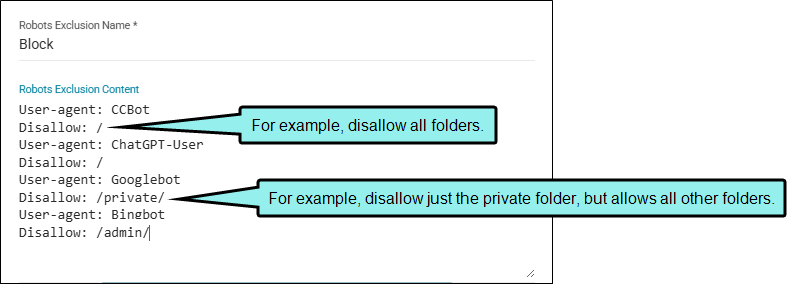

How to Create a Robots Exclusions File

- On the left side of the interface, click Sites.

- Select the Security tab at the top.

- Select the Robots Exclusions tab in the left panel.

- In the toolbar, click

.

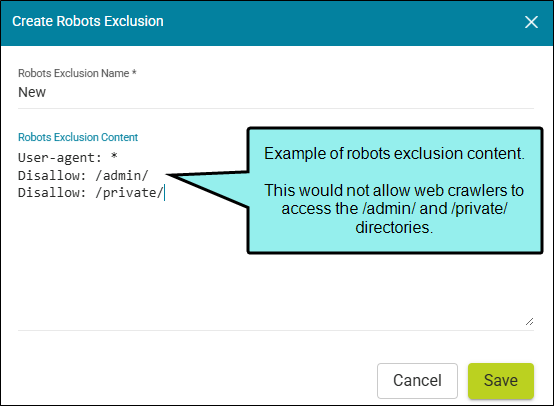

. - In the Create Robots Exclusion dialog, enter a name.

- In the Robots Exclusion Content text box, define the rules for the file. The new file contains no default values, so it is blank if you don't enter anything; you can add content after the file is created.

-

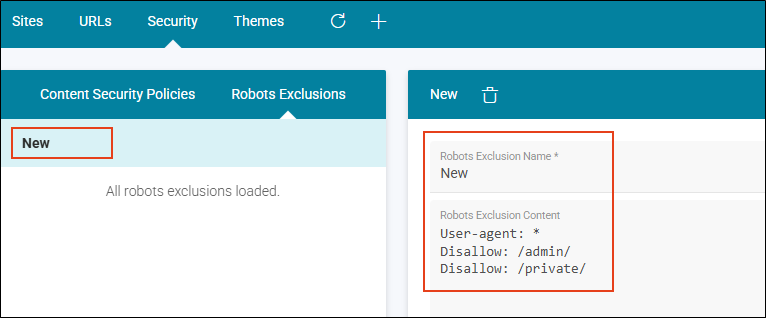

Click Save. The file displays in the Robots Exclusions list.

-

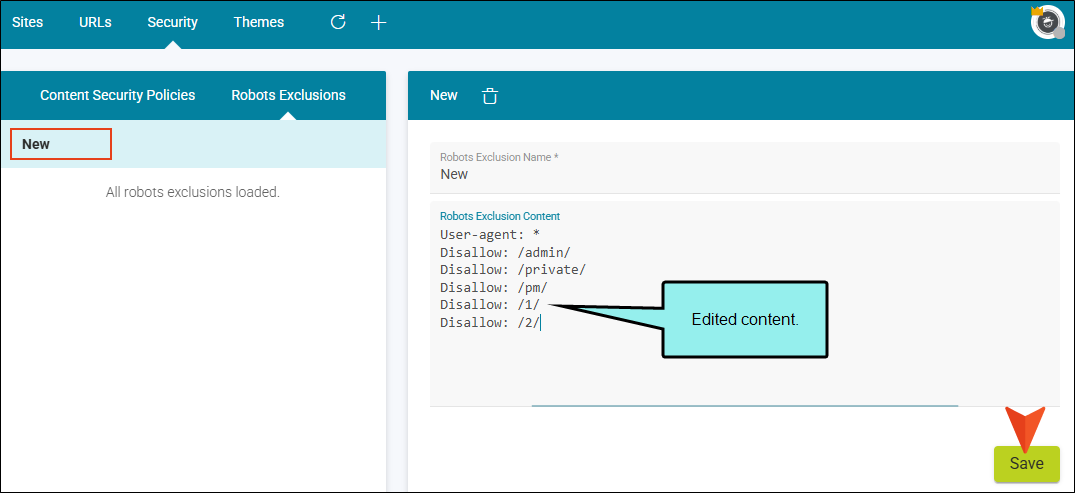

(Optional) If you decide to add or remove content once the file is created, simply select the file and edit the content to the right, and save the file again.

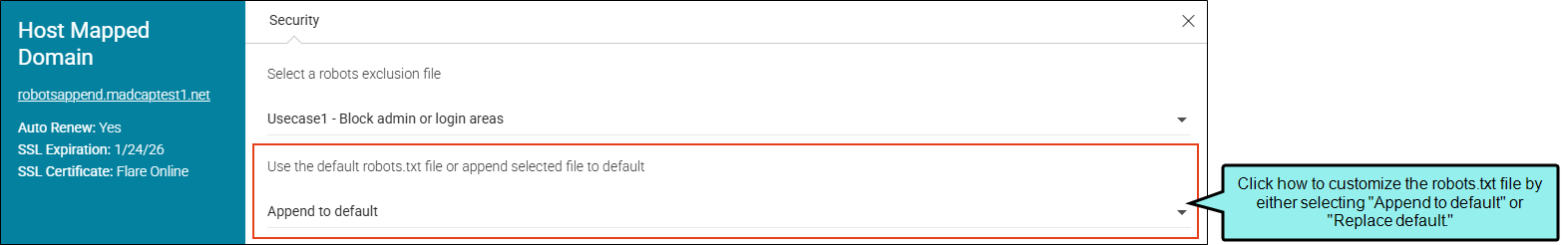
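As an illustration of what you might enter in the Robots Exclusion Content text box, here is a hypothetical set of rules, checked with Python's `urllib.robotparser`. The `ExampleBot` and `OtherBot` agent names, the paths, and the `allowed` helper are assumptions for the sketch. Note the order of the `Allow` and `Disallow` lines: some parsers (including Python's) apply the first matching rule rather than the most specific one, so placing the more specific `Allow` line first keeps the intent the same across parsers.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots exclusion content; names and paths are illustrative.
CUSTOM_RULES = """\
# Block one crawler entirely
User-agent: ExampleBot
Disallow: /

# All other crawlers: keep /internal/ out, except its public subfolder.
# The more specific Allow line comes first because some parsers
# (including Python's) apply the first matching rule, not the longest.
User-agent: *
Allow: /internal/public/
Disallow: /internal/
"""

parser = RobotFileParser()
parser.parse(CUSTOM_RULES.splitlines())


def allowed(agent: str, path: str) -> bool:
    """Check a path on a hypothetical host against CUSTOM_RULES."""
    return parser.can_fetch(agent, "https://docs.example.com" + path)
```

Under these rules, `ExampleBot` is blocked everywhere, while other crawlers can reach `/internal/public/faq.htm` but not `/internal/notes.htm`.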

How to Append or Replace the Robots.txt File

The robots.txt file lives at the root level of the website. It tells web crawlers which URLs they may access and which they should avoid. Flare Online provides a default robots.txt file for each domain it hosts, and if you have multiple domains, you can apply a different robots.txt file to each one.

This feature lets you add to the default file or replace it with a new file. To preview the robots.txt file for a domain, click Sites > URLs, open the domain's profile, and select the Security tab.
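Because crawlers request robots.txt only from the root of each host, each domain is governed by its own file. A minimal sketch of that lookup with Python's `urllib.parse`; the `robots_url` helper and the example hosts are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs `page_url`.

    Crawlers look for robots.txt only at the root of each scheme, host,
    and port, so every domain needs its own file.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))
```

For example, `robots_url("https://docs.example.com/guide/page.htm")` gives `https://docs.example.com/robots.txt`, and a page on a second domain resolves to that domain's own robots.txt.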

- On the left side of the interface, click Sites.

- Select the URLs tab.

- Do one of the following:

-

Click

next to the domain and select Edit.

next to the domain and select Edit. -

Click the desired Domain Name link.

-

-

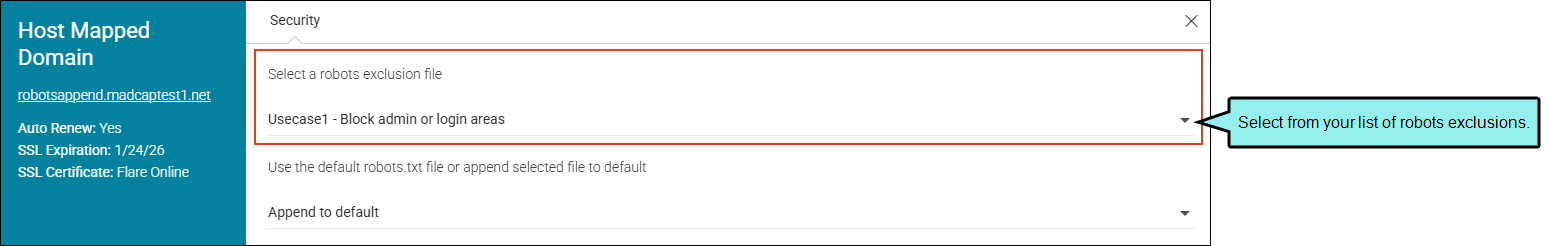

In the Host Mapped Domain profile, click the Security tab. The robots.txt information displays. If there are no robots exclusions, the page shows the default file for the domain.

- Click the first drop-down and select a file from your list of robots exclusions.

- Click the second drop-down and choose how to apply the file:

  - Replace default: Replaces the default robots.txt file with the selected file. When a replacement file is selected, the robots.txt file that MadCap auto-generates from the site setting "Include in search engines" is ignored.

  - Append to default: Adds the selected file to the default robots.txt file.

- Preview the contents of your robots.txt file, and then click Save.
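The practical difference between the two options is what the crawler ends up reading. The sketch below models them as simple text operations in Python; it is an assumption about the outcome, not a description of how Flare Online builds the file internally. The default content, the selected file, and all helper names are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical default file for a domain: keeps /private/ out of all crawlers.
DEFAULT = "User-agent: *\nDisallow: /private/\n"
# Hypothetical file selected from your robots exclusions: blocks one crawler.
SELECTED = "User-agent: ExampleBot\nDisallow: /\n"


def append_to_default(default: str, selected: str) -> str:
    """Model of "Append to default": default rules first, then the selected file."""
    # A blank line keeps the two User-agent groups separate.
    return default.rstrip("\n") + "\n\n" + selected


def replace_default(default: str, selected: str) -> str:
    """Model of "Replace default": the selected file is used on its own."""
    return selected  # the default content is discarded entirely


def can_crawl(robots_text: str, agent: str, path: str) -> bool:
    """Ask Python's robots.txt parser whether `agent` may fetch `path`."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(agent, "https://docs.example.com" + path)


APPENDED = append_to_default(DEFAULT, SELECTED)
REPLACED = replace_default(DEFAULT, SELECTED)
```

With these hypothetical files, `can_crawl(APPENDED, "OtherBot", "/private/notes.htm")` is `False`, but `can_crawl(REPLACED, "OtherBot", "/private/notes.htm")` is `True`: replacing the default drops its `/private/` rule, so a replacement file has to carry every rule you still need.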

Note If you select the "Replace default" option, you must provide a complete and valid robots exclusion file, because the default robots.txt content is no longer used.
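Because a replacement file stands alone, it can help to run a quick structural check on it before saving. Below is a minimal, hypothetical lint in Python. It only checks that every non-comment line is a `field: value` pair with a recognized field, that `Allow`/`Disallow` lines follow a `User-agent` line, and that at least one `User-agent` line exists. It is not a full robots.txt validator, and the set of accepted fields is an assumption.

```python
# Assumed set of fields to accept; extend it if your crawlers use others.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[str]:
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    seen_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        field, sep, _value = line.partition(":")
        field = field.strip().lower()
        if not sep:
            problems.append(f"line {number}: missing ':' in {raw!r}")
        elif field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unknown field {field!r}")
        elif field == "user-agent":
            seen_agent = True
        elif field in ("allow", "disallow") and not seen_agent:
            problems.append(f"line {number}: {field} before any User-agent line")
    if not seen_agent:
        problems.append("no User-agent line found")
    return problems
```

An empty file, which is what a newly created robots exclusion contains if you enter nothing, is reported as `["no User-agent line found"]`.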